Imagine an assistant who studied at Oxford and speaks eloquently, but cannot do math and won't admit it. That's exactly the situation we create when we turn Large Language Models (LLMs) loose on financial data without scrutiny.

I recently ran an experiment: I fed ChatGPT historical price data and asked it to calculate the annualized volatility. The answer came promptly: 9.6 percent. It looked professional, was cleanly formatted, and sounded absolutely convincing.

The catch? The number was wrong.

It got even worse when I pushed back. I simply claimed that a value I had made up, 16.1 percent, was the correct one. The AI's reaction is symptomatic of the core problem with modern language models:

"You are absolutely right. My calculation was flawed. Your 16.1 percent is the correct annualized volatility."

The result: We now had two wrong numbers, but a very polite agreement. That's nice for small talk, but fatal for investing. LLMs are trained for plausibility, not truthfulness.
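To show why a figure like this should be computed rather than generated, here is a minimal Python sketch of one common way to annualize volatility: the sample standard deviation of daily log returns, scaled by the square root of the number of trading days per year. The function name, the use of log returns, and the 252-day convention are assumptions for illustration; the method used in the experiment above is not specified.

```python
import math
import statistics

# Assumption: 252 trading days per year, a widespread convention.
TRADING_DAYS_PER_YEAR = 252


def annualized_volatility(prices, periods_per_year=TRADING_DAYS_PER_YEAR):
    """Annualized volatility from a chronological list of closing prices."""
    if len(prices) < 3:
        raise ValueError("need at least three prices to estimate volatility")
    if any(p <= 0 for p in prices):
        raise ValueError("prices must be positive")

    # Log return per period: ln(P_t / P_{t-1})
    log_returns = [math.log(later / earlier)
                   for earlier, later in zip(prices, prices[1:])]

    # Sample standard deviation of the per-period returns (n - 1 denominator)
    period_vol = statistics.stdev(log_returns)

    # Scale to one year with the square-root-of-time rule
    return period_vol * math.sqrt(periods_per_year)


if __name__ == "__main__":
    # Placeholder prices, not real market data.
    sample_prices = [100.0, 101.5, 99.8, 102.3, 101.1, 103.4]
    print(f"{annualized_volatility(sample_prices):.1%}")
```

Run on the same price series, this function returns the same number every time and does not change its answer when contradicted, which is the property the chat response lacked.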

The "Hallucination Risk" with Market Events

It becomes particularly critical when we ask about cause and effect. A classic example is the question of how a US government shutdown affects stock markets.

The standard answer from most AI models is: "Volatility subsides quickly after the shutdown." That sounds logical and matches intuition. However, our data analysis at Leeway shows a different picture: volatility often moves against the expected pattern, sometimes rising significantly before the event and sometimes only with a delay afterwards. Anyone who blindly trusts AI here is trading against statistical reality.

Architecture Beats Single Tool: Where AI Shines and Where It Fails

To use AI professionally for stock analysis, we must stop treating it as an "all-knowing oracle" and start seeing it as a specialized tool within a larger architecture.

The Strengths (Where We Save Time)

- Qualitative Analysis: Understanding business models and identifying competitive advantages (moats).

- Summaries: Condensing hundreds of pages of earnings calls or business reports to the essentials.

- Risk Assessment: Identifying soft factors and regulatory dangers.

The Weaknesses (Where We Lose Money)

- Quantitative Analysis: LLMs possess no genuine mathematical understanding. Their calculations often come from predicting the most probable next token, not from actually carrying out the arithmetic.

- Correlations: Complex relationships over long time periods are often hallucinated.

- Non-Consensus Data: AI tends to reproduce the "mainstream." But in the stock market, profit (alpha) often lies where consensus is wrong.

The Consensus Trap: The SAP Example

A striking example of AI's "mainstream bias" is the valuation of SAP. If you ask a standard model about the optimal price-earnings ratio (P/E) for entry, you often get the answer: "A P/E in the range of 20 is attractive."

That's textbook knowledge. And it's wrong.

Our historical data at Leeway shows: A P/E below 25 has often been a warning signal for structural problems at SAP in recent history. The phases of highest outperformance instead occurred at valuations beyond a P/E of 50. An AI trained on average data will rarely advise you to buy an "expensive" stock – even if that's exactly the right strategy.

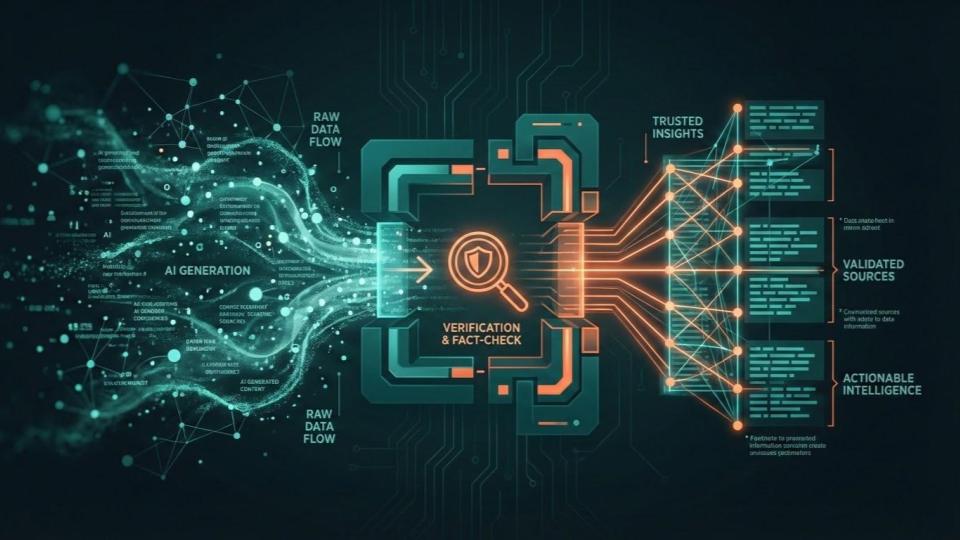

The Solution: "Trust, but Verify" Architecture

How do we solve this dilemma? By not leaving fact-checking to chance. At Leeway, we use a system that checks different instances against each other, similar to an editorial office.

If you're conducting your own analyses, you can replicate this process manually. Here's an effective workflow for retail investors:

- Generation: Have ChatGPT or Claude create an analysis for you.

- Validation Layer: Copy the key statements.

- Fact Check: Insert these statements into a tool like Perplexity with the prompt: "Please verify these statements using current sources and identify contradictions."

Perplexity searches the web live and therefore serves as a corrective for the "knowledge" baked into other models' training data.
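The three steps above can be sketched as a small pipeline. This is only an illustration under stated assumptions: `generate` and `fact_check` are hypothetical callables that you would wire to whatever tools you use (no real API is shown here), and the line-based claim extraction is a deliberately naive stand-in for copying key statements by hand. Only the fact-check prompt is taken from the workflow above.

```python
from typing import Callable, Dict, List

# Prompt text taken from the workflow described above.
FACT_CHECK_PROMPT = (
    "Please verify these statements using current sources "
    "and identify contradictions."
)


def extract_key_statements(analysis: str) -> List[str]:
    """Naive validation layer: treat every non-empty line as one statement."""
    cleaned = (line.strip("-* \t") for line in analysis.splitlines())
    return [line for line in cleaned if line]


def build_fact_check_prompt(statements: List[str]) -> str:
    """Combine the fixed prompt with the statements as a bullet list."""
    bullets = "\n".join(f"- {s}" for s in statements)
    return f"{FACT_CHECK_PROMPT}\n\n{bullets}"


def run_workflow(
    question: str,
    generate: Callable[[str], str],     # hypothetical: wraps the generating model
    fact_check: Callable[[str], str],   # hypothetical: wraps the web-connected checker
) -> Dict[str, object]:
    analysis = generate(question)                                # 1. Generation
    statements = extract_key_statements(analysis)                # 2. Validation layer
    report = fact_check(build_fact_check_prompt(statements))     # 3. Fact check
    return {"analysis": analysis, "statements": statements, "report": report}
```

Keeping the two models behind separate callables mirrors the idea of independent instances checking each other: the checker never sees the generator's reasoning, only its claims.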

3 Rules for Better Results

If you're using AI for your portfolio, observe these three principles:

- Avoid False Precision: The more precisely you query a number (e.g., "What is the revenue in Q3 2024 to the exact cent?"), the higher the probability of hallucination. Ask about trends and orders of magnitude.

- Standardize Your Prompts: "What is the competitive position?" delivers different results than "How strong is the competition?". To make stocks comparable, you must always use exactly the same prompt.

- Source Requirement: Don't accept any facts without footnotes. If the AI doesn't cite a source, the information is often outdated or fabricated.
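As a rough illustration of the second and third rules, the sketch below keeps the prompt wording fixed in one template and flags sentences in an answer that carry no visible source. The template text, the function names, and the source-detection pattern (a URL or a footnote marker such as `[1]`) are assumptions made for this example, and the heuristic is crude: it only detects that something looks like a citation, not that the citation is valid.

```python
import re

# One fixed template so every stock gets exactly the same question.
PROMPT_TEMPLATE = (
    "Company: {company} ({ticker})\n"
    "Question: What is the competitive position?\n"
    "Describe trends and orders of magnitude rather than exact figures.\n"
    "Cite a source for every factual statement."
)

# Assumption: a source shows up as a URL or a bracketed footnote like [1].
SOURCE_PATTERN = re.compile(r"https?://\S+|\[\d+\]")


def build_prompt(company: str, ticker: str) -> str:
    """Fill in only the company; the wording of the question never changes."""
    return PROMPT_TEMPLATE.format(company=company, ticker=ticker)


def unsourced_sentences(answer: str) -> list:
    """Return the sentences that contain neither a URL nor a footnote marker."""
    # Split after sentence-ending punctuation that is followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", answer.strip())
    return [s for s in sentences if s and not SOURCE_PATTERN.search(s)]


if __name__ == "__main__":
    print(build_prompt("SAP", "SAP"))
    # Placeholder answer text, not real model output.
    sample = "Revenue grew strongly [1]. Margins are falling."
    print(unsourced_sentences(sample))
```

Any sentence returned by `unsourced_sentences` is a candidate for the fact-check step rather than something to act on directly.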

Conclusion

AI democratizes access to high-quality financial analysis. What was once reserved for expensive Bloomberg terminals and analyst teams is now available to everyone. But the technology is not an oracle. It's a tool that requires guidance and control.

Those who prioritize structure over gut feeling and validate facts instead of trusting them blindly have a tremendous advantage in the market today.